At WWDC 2020, Apple unveiled a whole new API built exclusively for devices with the U1 chip (iPhone 11, iPhone 11 Pro, and iPhone 11 Pro Max) running iOS 14. With this new API, you can add spatial awareness to your apps: your app can discover other devices, securely create a session between them, and receive information about their distance and direction. Each device can run as many sessions as you need, opening up a whole new level of interactions with nearby devices.

Nearby Interaction Framework

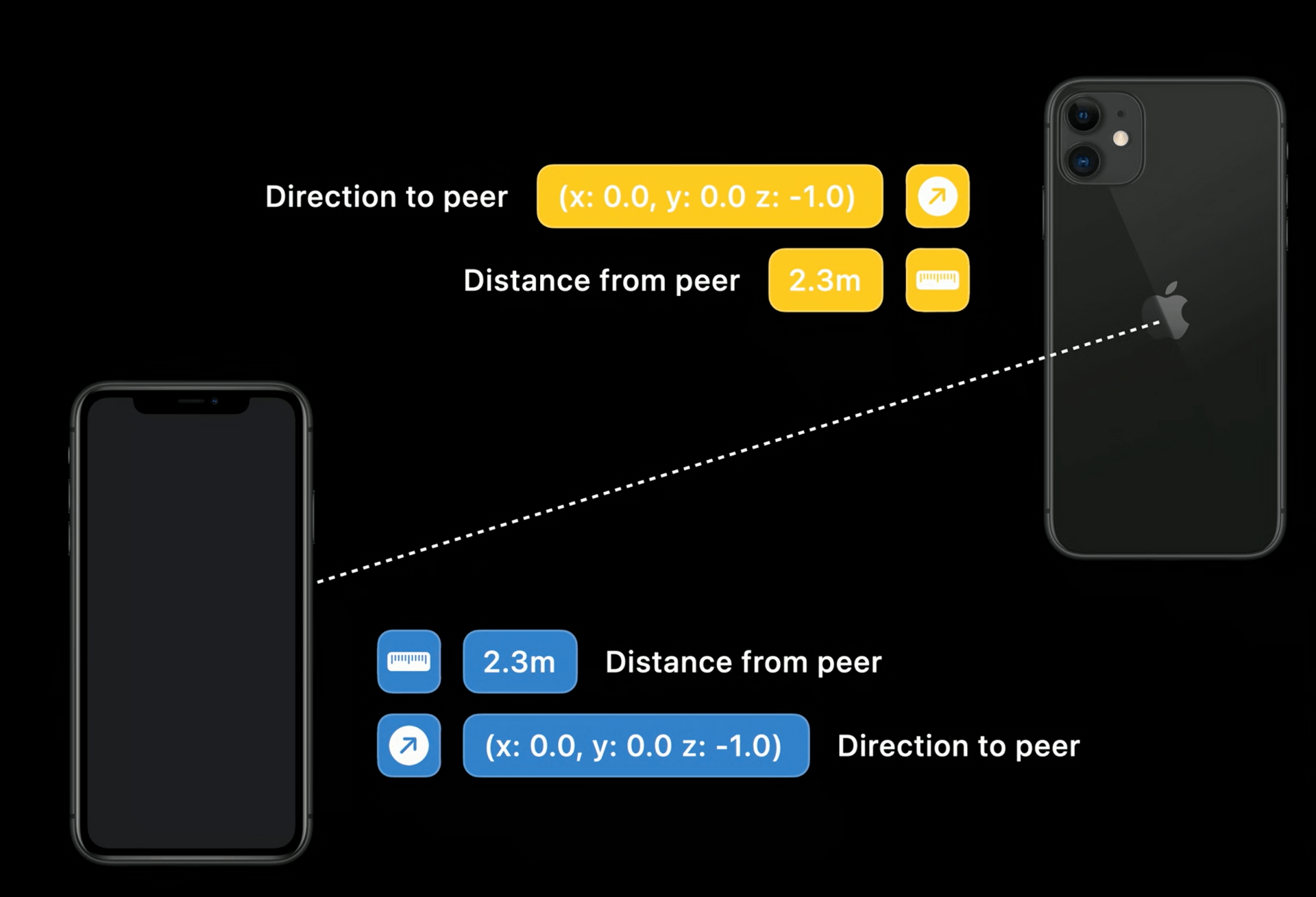

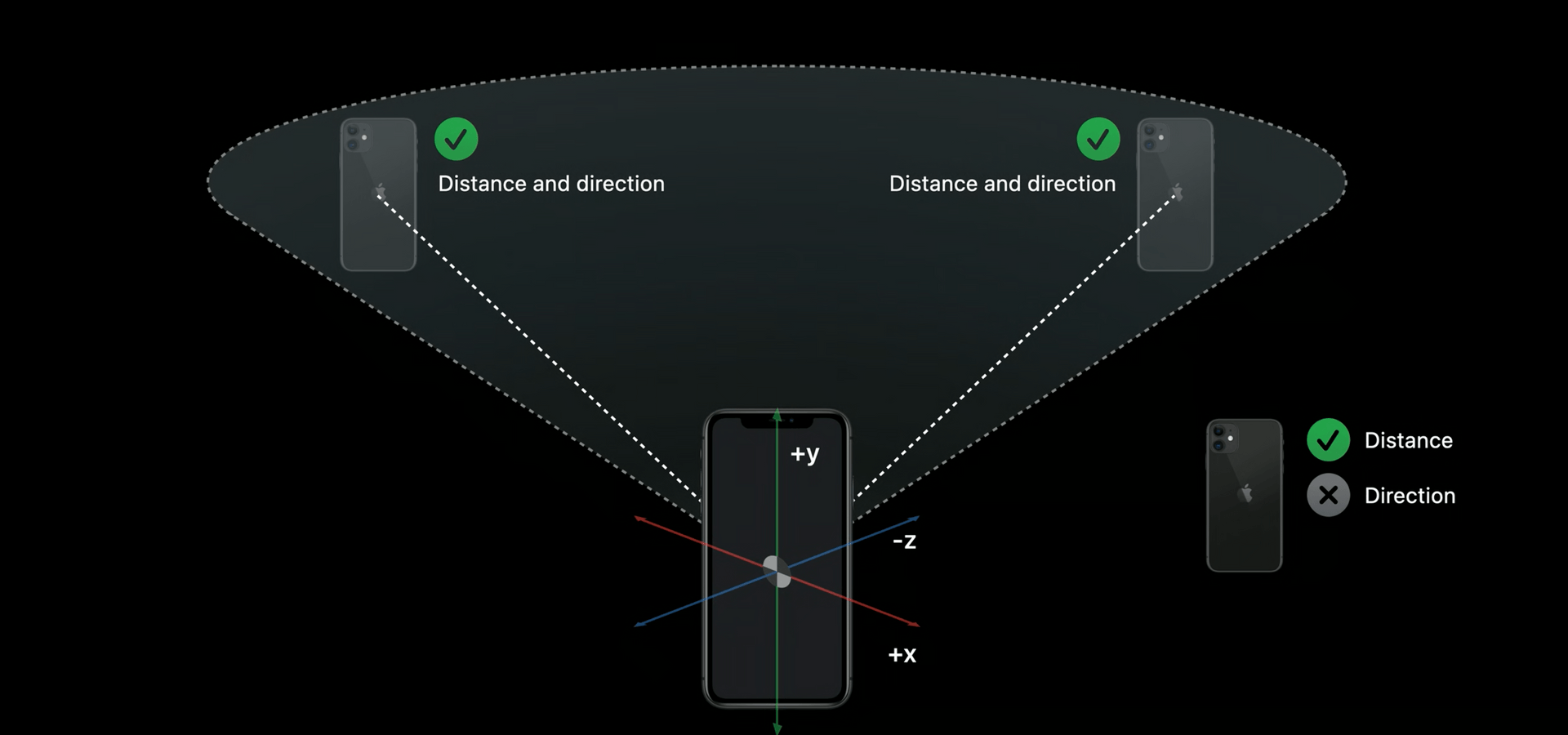

This new framework provides your app with two main types of output: a measurement of distance and a measurement of direction. While your app is running a session between two devices, both devices continuously receive updates about each other's distance and direction.

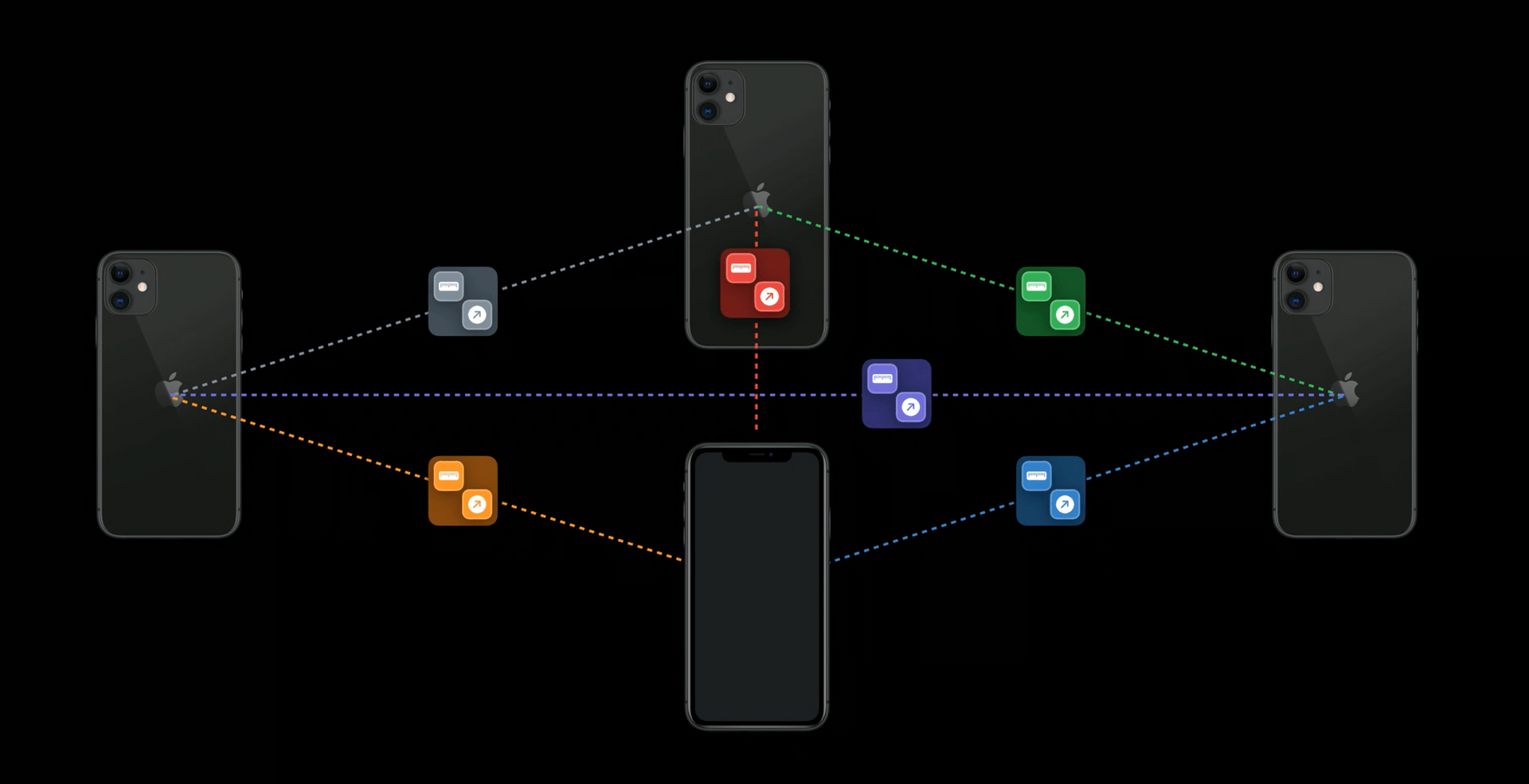

Your app is not limited to a single session; you can start several sessions, allowing one device to interact with multiple devices at once. For example, four devices could share Nearby Interaction sessions with each other, with each device running three sessions in parallel, one for each of the other devices.
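Because every peer needs its own session, a common pattern is to keep one session per peer identifier. The framework-free sketch below illustrates that bookkeeping, assuming your networking layer gives each peer some hashable identifier. `SessionRegistry` and `sessionsPerDevice(deviceCount:)` are hypothetical names, and the session type is generic so it could hold `NISession` objects in an app or a stand-in value in tests.

```swift
/// Hypothetical helper: keeps at most one session per peer.
/// `PeerID` comes from your own networking layer; `Session` would be
/// `NISession` in an app, or any stand-in type in tests.
struct SessionRegistry<PeerID: Hashable, Session> {
    private(set) var sessions: [PeerID: Session] = [:]

    init() {}

    /// Returns the session already associated with `peer`,
    /// or creates and stores a new one using `make`.
    mutating func session(for peer: PeerID, make: () -> Session) -> Session {
        if let existing = sessions[peer] {
            return existing
        }
        let created = make()
        sessions[peer] = created
        return created
    }

    /// Drops the session for `peer`, for example after it was invalidated.
    @discardableResult
    mutating func removeSession(for peer: PeerID) -> Session? {
        return sessions.removeValue(forKey: peer)
    }
}

/// In a full mesh, every device runs one session per other device.
func sessionsPerDevice(deviceCount: Int) -> Int {
    return max(deviceCount - 1, 0)
}
```

With four devices, `sessionsPerDevice(deviceCount: 4)` returns 3, matching the example above.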

As with other Apple frameworks, you use this API by providing a session configuration object. This configuration object tells the system, on both devices, how to identify the other device. The key concept here is peer discovery: devices discover each other in a privacy-preserving manner by using discovery tokens. These tokens are randomly generated and can only be used by a single device in a particular session, which means a token is only valid for the lifetime of that session. If you invalidate a session or one of the devices exits the app, the token can no longer be used, and your app can only start a new session by exchanging new discovery tokens.

What does this look like in code?

For all this to work, your app needs a way to share these discovery tokens between devices, usually through your own network layer. A basic session setup involves implementing these three methods:

```swift
import Foundation
import NearbyInteraction

// These members live in a type (for example a view controller) that conforms
// to NISessionDelegate, so that it can be assigned as the session's delegate.
var mySession: NISession?

func prepareMySession() {
    // Verify hardware support (a U1 chip is required).
    guard NISession.isSupported else {
        print("Nearby Interaction is not available on this device.")
        return
    }
    mySession = NISession()
    mySession?.delegate = self
}

func sendDiscoveryTokenToMyPeer(myPeer: Any) {
    // Each session generates its own discovery token.
    guard let myToken = mySession?.discoveryToken else {
        return
    }
    if let encodedToken = try? NSKeyedArchiver.archivedData(withRootObject: myToken, requiringSecureCoding: true) {
        // Send encodedToken to myPeer across whatever transport technology you use.
    }
}

func runMySession(peerTokenData: Data) {
    guard let peerDiscoveryToken = try? NSKeyedUnarchiver.unarchivedObject(ofClass: NIDiscoveryToken.self, from: peerTokenData) else {
        print("Failed to decode discovery token.")
        return
    }
    // The configuration tells the session which peer to interact with.
    let config = NINearbyPeerConfiguration(peerToken: peerDiscoveryToken)
    mySession?.run(config)
}
```
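How the archived token bytes from `sendDiscoveryTokenToMyPeer(myPeer:)` travel between devices is up to your app. As one possible approach, sketched here under the assumption that your network layer can send arbitrary `Data`, you could wrap those bytes in a small `Codable` envelope. `TokenMessage`, `senderID`, and the JSON format are hypothetical choices, not part of the Nearby Interaction framework.

```swift
import Foundation

/// Hypothetical message your own network layer sends between peers.
/// `tokenData` holds the bytes produced by
/// NSKeyedArchiver.archivedData(withRootObject:requiringSecureCoding:).
struct TokenMessage: Codable, Equatable {
    let senderID: String
    let tokenData: Data
}

/// Encodes a message for transport; JSONEncoder stores `Data` as Base64 by default.
func encodeTokenMessage(_ message: TokenMessage) throws -> Data {
    return try JSONEncoder().encode(message)
}

/// Decodes a received payload, returning nil if it is not a valid TokenMessage.
func decodeTokenMessage(_ payload: Data) -> TokenMessage? {
    return try? JSONDecoder().decode(TokenMessage.self, from: payload)
}
```

On the receiving side, you would pass `message.tokenData` to `runMySession(peerTokenData:)`.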

Once you call the run() method, users are shown a permission dialog that lets them either deny access or allow the session to run once. If they allow it, your app starts receiving updates.

These updates are delivered through the NISessionDelegate protocol, which you need to implement to receive them. The available delegate methods are described below. Whenever your app receives information about nearby devices, the following delegate method is called:

```swift
func session(_ session: NISession, didUpdate nearbyObjects: [NINearbyObject]) {
    // Read distance and direction from each updated object here.
}
```

This method gives you a list of nearby objects containing information about distance and direction. You can use this information to update your UI or business logic.
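A typical first step inside `session(_:didUpdate:)` is to pick out the readings you care about. The framework-free sketch below finds the closest object with a known distance. `Reading` is a hypothetical stand-in for `NINearbyObject` (whose `distance` is likewise optional), used so the logic can run without U1 hardware.

```swift
/// Hypothetical stand-in for NINearbyObject, keeping only what this sketch needs.
struct Reading {
    let peerID: String
    let distance: Float?  // meters; nil when unavailable, like NINearbyObject.distance
}

/// Returns the reading with the smallest known distance, skipping nil distances.
func closestReading(in readings: [Reading]) -> Reading? {
    return readings
        .compactMap { reading in reading.distance.map { (reading, $0) } }
        .min { $0.1 < $1.1 }?.0
}
```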

Whenever a peer device is no longer available, for example because it stopped responding or ended its session, the following delegate method is called:

```swift
func session(_ session: NISession, didRemove nearbyObjects: [NINearbyObject], reason: NINearbyObject.RemovalReason) {
    // reason is .peerEnded (the peer ended its session) or .timeout (the peer stopped responding).
}
```

A session can also be suspended, for example when your app is no longer in the foreground. In that case, the following delegate method is called:

```swift
func sessionWasSuspended(_ session: NISession) {
}
```

While a session is suspended, your app stops receiving distance and direction updates from it. When the suspension ends, the session does not restart automatically, and you need to call run() again with the same configuration you used when it first started:

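```swift
func sessionSuspensionEnded(_ session: NISession) {
    // Rerun the session with the configuration it was originally started with.
    if let config = session.configuration {
        session.run(config)
    }
}
```

Finally, if a session is invalidated, the following delegate method is called: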

```swift
func session(_ session: NISession, didInvalidateWith error: Error) {
}
```

When that happens, your app no longer receives updates and cannot restart a session with the same discovery token. Instead, you need to exchange new discovery tokens.
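To keep the lifecycle rules above straight, it can help to model them explicitly. The sketch below is a hypothetical, framework-free state machine: `SessionEvent` mirrors the delegate callbacks, and `RemovalReason` is a local copy of the two `NINearbyObject.RemovalReason` cases so the code runs without the framework. Rerunning the configuration after a timeout assumes the session itself is still valid in that case; treat the whole policy as a starting point to adapt rather than required behavior.

```swift
/// Local mirror of NINearbyObject.RemovalReason (hypothetical, for illustration).
enum RemovalReason {
    case peerEnded  // the peer ended its session
    case timeout    // the peer stopped responding
}

/// Delegate callbacks reduced to the events this sketch cares about.
enum SessionEvent {
    case peerRemoved(RemovalReason)  // session(_:didRemove:reason:)
    case suspended                   // sessionWasSuspended(_:)
    case suspensionEnded             // sessionSuspensionEnded(_:)
    case invalidated                 // session(_:didInvalidateWith:)
}

enum SessionState: Equatable {
    case running
    case suspended
    case needsNewTokens  // old tokens are unusable; a new token exchange is required
}

/// What the app should do in response to an event (hypothetical policy).
enum SessionAction: Equatable {
    case noAction
    case rerunConfiguration  // call run() again with the same configuration
    case exchangeNewTokens   // create a new NISession and share its new token
}

func next(after event: SessionEvent, from state: SessionState) -> (SessionState, SessionAction) {
    switch (state, event) {
    case (.needsNewTokens, _):
        // Nothing can resume until new tokens have been exchanged.
        return (.needsNewTokens, .noAction)
    case (_, .invalidated), (_, .peerRemoved(.peerEnded)):
        return (.needsNewTokens, .exchangeNewTokens)
    case (_, .peerRemoved(.timeout)):
        // Assumption: the session is still valid, so rerun it.
        return (.running, .rerunConfiguration)
    case (_, .suspended):
        return (.suspended, .noAction)
    case (.suspended, .suspensionEnded):
        // Sessions do not restart automatically after a suspension.
        return (.running, .rerunConfiguration)
    case (.running, .suspensionEnded):
        return (.running, .noAction)
    }
}
```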

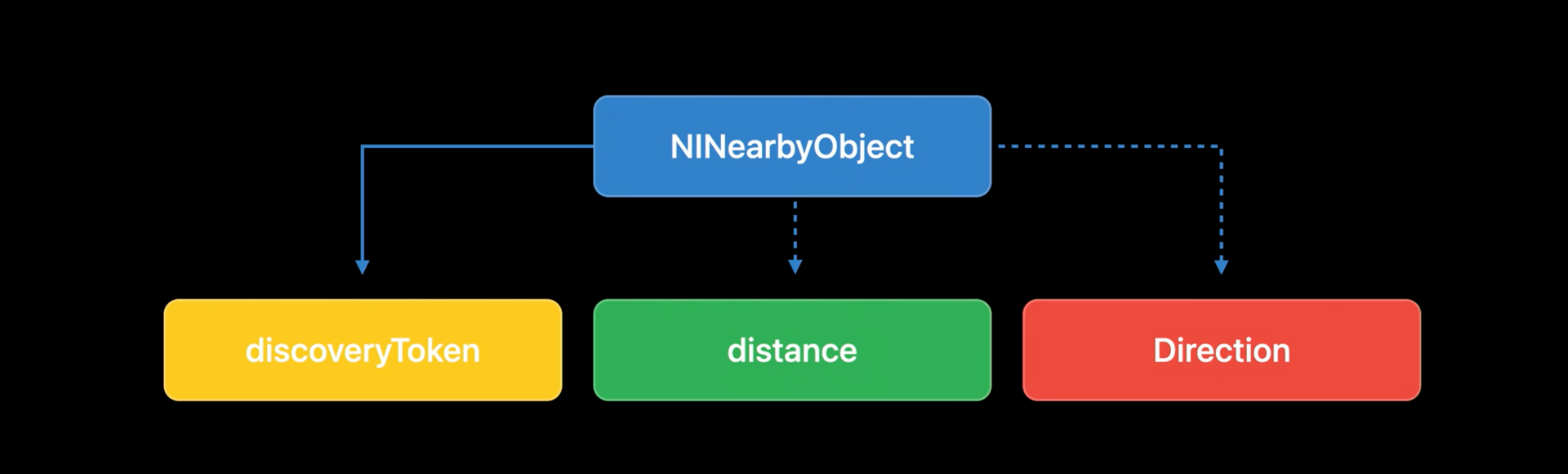

Nearby Objects

Let's take a closer look at what a nearby object (NINearbyObject) is and what it contains. These objects provide the spatial output you need to make sense of other devices:

discoveryToken

The discovery token of the peer device, that is, the token you provided in the configuration when calling the run() method.

distance

An optional Float containing the distance to the peer device in meters, or nil when no measurement is available.

direction

An optional three-dimensional vector (simd_float3) whose x, y, and z components describe the direction to the peer device relative to this device, or nil when no measurement is available.
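A direction vector is often easier to use in a UI as two angles, for example to rotate an arrow. The sketch below applies a standard spherical-angle conversion to a `SIMD3<Float>` (the type `simd_float3` aliases on Apple platforms). The axis convention is an assumption, not something stated in this article: x points to the right, y points up, and -z points out of the back of the device, toward the center of the field of view. Check it against Apple's documentation before relying on it. `PeerAngles` and `angles(from:)` are hypothetical names.

```swift
import Foundation

/// Horizontal and vertical angles (in radians) toward a peer.
struct PeerAngles {
    let azimuth: Float    // positive when the peer is to the right
    let elevation: Float  // positive when the peer is above
}

/// Converts a direction vector into angles.
/// Assumed axes: +x right, +y up, -z out of the back of the device.
func angles(from direction: SIMD3<Float>) -> PeerAngles {
    // Length of the vector's projection onto the horizontal (x-z) plane.
    let horizontal = (direction.x * direction.x + direction.z * direction.z).squareRoot()
    return PeerAngles(
        azimuth: atan2(direction.x, -direction.z),
        elevation: atan2(direction.y, horizontal)
    )
}
```

Under these assumptions, a peer straight ahead, `SIMD3<Float>(0, 0, -1)`, yields an azimuth and elevation of 0.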

Caveats

One thing to take into account is that distance and direction are optional, which means these properties can be nil. One reason is that, much like other sensors (e.g. the camera), the hardware used by Nearby Interaction has a field of view: an imaginary cone coming out of the back of the device. When a peer device is inside this field of view, your app should expect to receive reliable distance and direction measurements. When it is outside the field of view, your app still receives a distance measurement but no direction measurement.
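One way to act on these optional values is to map them to distinct UI states. The sketch below takes the two optional properties directly, so it runs without U1 hardware; in an app you would pass a nearby object's `distance` and `direction`. `PeerPresentation`, `presentation(distance:direction:)`, and `distanceLabel(_:)` are hypothetical names, and treating a direction without a distance as unavailable is an assumption.

```swift
import Foundation

/// Hypothetical UI states derived from a single nearby-object update.
enum PeerPresentation: Equatable {
    case pointer(meters: Float, direction: SIMD3<Float>)  // peer inside the field of view
    case distanceOnly(meters: Float)                       // peer outside the field of view
    case unavailable                                       // no usable measurement
}

/// Maps the optional measurements (as found on NINearbyObject) to a UI state.
func presentation(distance: Float?, direction: SIMD3<Float>?) -> PeerPresentation {
    switch (distance, direction) {
    case let (meters?, vector?):
        return .pointer(meters: meters, direction: vector)
    case (let meters?, nil):
        return .distanceOnly(meters: meters)
    default:
        // Assumption: a direction without a distance is treated as unusable.
        return .unavailable
    }
}

/// Formats a distance in meters with one decimal place for display.
func distanceLabel(_ meters: Float) -> String {
    return String(format: "%.1f m", Double(meters))
}
```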

There are other factors to take into account when you want to receive updates from this API. In particular, your app should understand the impact of the devices' physical orientation. For optimal performance, this API should be used in portrait mode: if one user runs a session holding the device in portrait while another holds it in landscape, your app will not be able to retrieve reliable measurements. You should consider this when designing your user experience.

You should also be mindful of occlusions: any object or body between the two devices will reduce the reliability of these measurements. For all these reasons, you should check these properties for nil and adapt your UI accordingly.

Ready for Spatial Awareness?

If you found this article interesting and think your app can benefit from such interactions, go ahead and download Xcode 12. You can start testing with U1-equipped devices or simply with multiple iOS Simulator windows, which support this new API. We at Notificare are also experimenting with this new capability and exploring possibilities within our platform. As always, we remain available via our Support Channel for any questions you might have.